Content tagged neural-network

Tags

Months

AWS has recently introduced the P3 instances. They come with Tesla V100 GPUs, so I decided to run a little benchmark to see how well they perform compared to my workstation (GeForce 1080 Ti) when training neural networks.

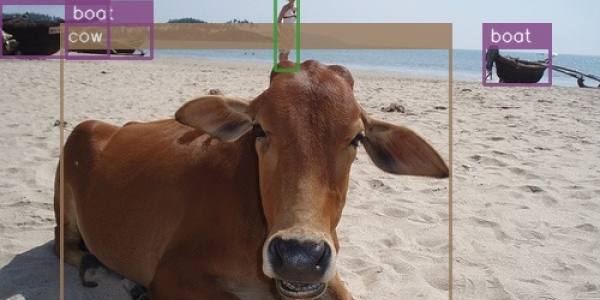

There was no understandable and straightforward implementation of SSD in TensorFlow, so I decided to make one. The original paper assumes familiarity with related research, so I needed to plow through several additional papers and a ton of source code to understand what's going on here. This post is an attempt to provide all that missing context in one place.

I have recently stumbled upon two articles on running TensorFlow on CPU setups and decided to check how well that works for the models I use. The results were somewhat unexpected.

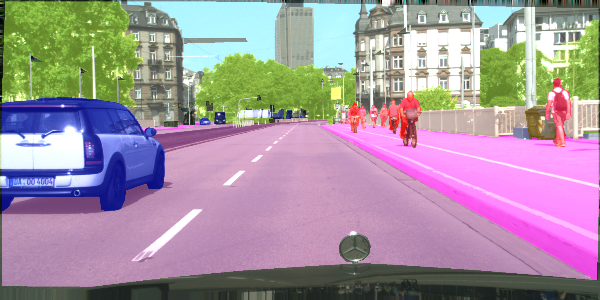

Semantic segmentation is a process of dividing an image into sets of pixels sharing similar properties and assigning one of the pre-defined labels to each of these sets. Or, in other words, you get a picture, and you're supposed to tell which pixels constitute a car and which constitute the pedestrians. Fun stuff.

You get to drive a car in a game capturing some images and the corresponding steering angle. You then use that data to build a neural network that spits out a steering angle for an input image such that the computer can drive the same car in the same game. The computer learns how to drive from you, hence behavioral cloning.