Content tagged computer-vision

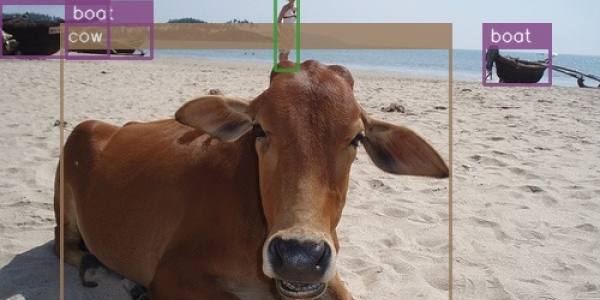

There was no clear, straightforward implementation of SSD (Single Shot MultiBox Detector) in TensorFlow, so I decided to make one. The original paper assumes familiarity with related research, so I had to plow through several additional papers and a ton of source code to understand what was going on. This post is an attempt to provide all that missing context in one place.

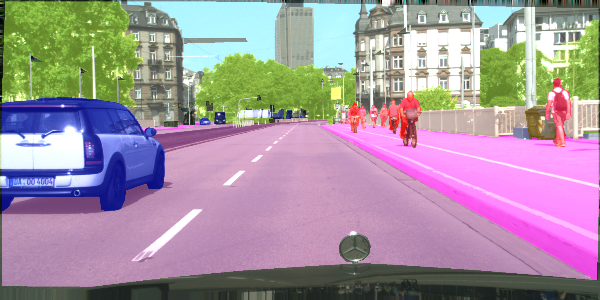

Semantic segmentation is the process of dividing an image into sets of pixels that share similar properties and assigning one of a set of predefined labels to each of these sets. In other words, you get a picture, and you're supposed to tell which pixels constitute a car and which constitute a pedestrian. Fun stuff.
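
To make the idea of "one label per pixel" concrete, here is a minimal sketch in plain Python. It is not taken from the post: the label ids, the `LABELS` table, the tiny hand-written `label_map`, and the `pixels_by_label` helper are all hypothetical, chosen only to show what the output of a segmentation looks like. A real model would predict such a map from the image.

```python
# Hypothetical label set; the ids and names are illustrative assumptions.
LABELS = {0: "background", 1: "car", 2: "pedestrian"}

# A tiny 4x6 "image" in which each entry is the label id assigned to a pixel.
# In practice a segmentation model would produce this map from the input image.
label_map = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 2, 0],
    [0, 1, 1, 0, 2, 0],
    [0, 0, 0, 0, 0, 0],
]


def pixels_by_label(label_map):
    """Group pixel coordinates (row, col) by the name of their label."""
    groups = {}
    for r, row in enumerate(label_map):
        for c, label_id in enumerate(row):
            groups.setdefault(LABELS[label_id], []).append((r, c))
    return groups


segments = pixels_by_label(label_map)
for name, pixels in segments.items():
    print(f"{name}: {len(pixels)} pixels")
```

Each key in `segments` corresponds to one of the predefined labels, and its value is the set of pixels that were assigned that label.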

In this post, I am playing with some classical computer vision algorithms and Support Vector Machines to locate the lane lines and other vehicles in a video taken by a front-facing camera in a car driving on a highway.