Content from 2016-03

Tags

Months

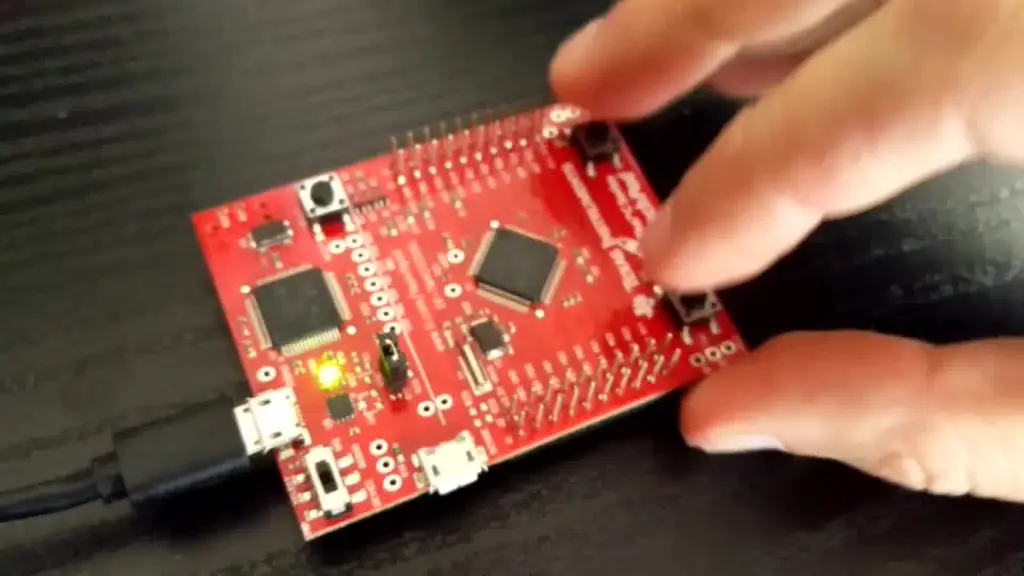

I have decided to build a simple Alien Invader game to learn more about microcontrollers. It will use Tiva as a base and a bunch of simple peripherals for I/O. This post describes a convenient development environment without any proprietary fluff.

Final thoughts. What's next?

What do you do when you need to signal state changes between threads? Condition variables are likely what you need. Futexes are general enough to be helpful here as well.

Chapter seven deals with read-write locks. They come in handy when many readers may access a resource mutated by relatively few writers.

Linux allows for assigning priorities to tasks and scheduling their execution according to various strategies. It has implications for locking, where the thread's priority may change after it has acquired a mutex. We deal with all that here.

Chapter five discusses cancelation. Threads may be canceled either synchronously or asynchronously. When they are canceled, they can call cleanup handlers. The process is quite involved and invokes some heavy Linux machinery.