Content tagged cuda

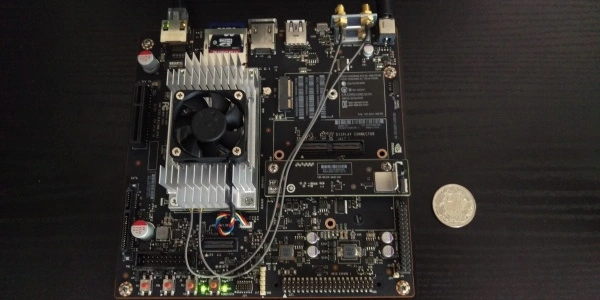

Some time ago, I wrote a post on building TensorFlow on a Jetson TX1. The build worked eventually, but it was slow because the TX1 is an edge device without much computing power. It's much faster to compile TensorFlow on a PC and copy the binaries to the target board. Here's how.

This post updates the previous one to cover a newer version of TensorFlow.

I need to run my neural network models on this board, so I need TensorFlow running on it. I had expected a smooth ride, but it turned out to be quite an adventure, and not a pleasant one. Here's a how-to, so you don't have to waste time figuring it out yourself.