Content tagged linux

The NAS odyssey continues. The disks work, and we need to combine them into a reasonably optimal RAID array. That requires some experimenting with low-level formatting and with the block and stripe sizes of the RAID, and making sure that the filesystem settings don't mess everything up.

We have an ARM board that will run the show, and we need to have the bootloader, the trusted firmware, and Linux run on it. Once Linux runs, the disks need to spin when needed, shut up when not, and the transition between these states must be glitch-free. This post describes how to make that happen.

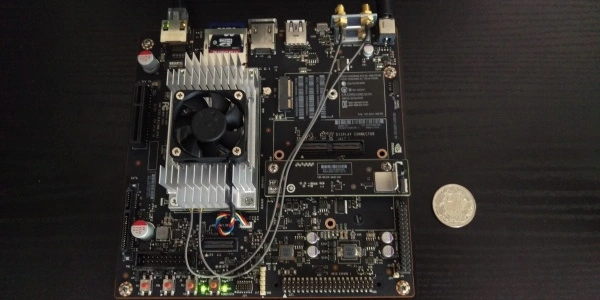

There are many pretty great ARM boards out there, but the stock firmware for most of them is riddled with binary blobs and is generally abandonware. Fortunately, it's usually not that hard to make Debian work on most of them, including all the necessary multimedia peripherals.

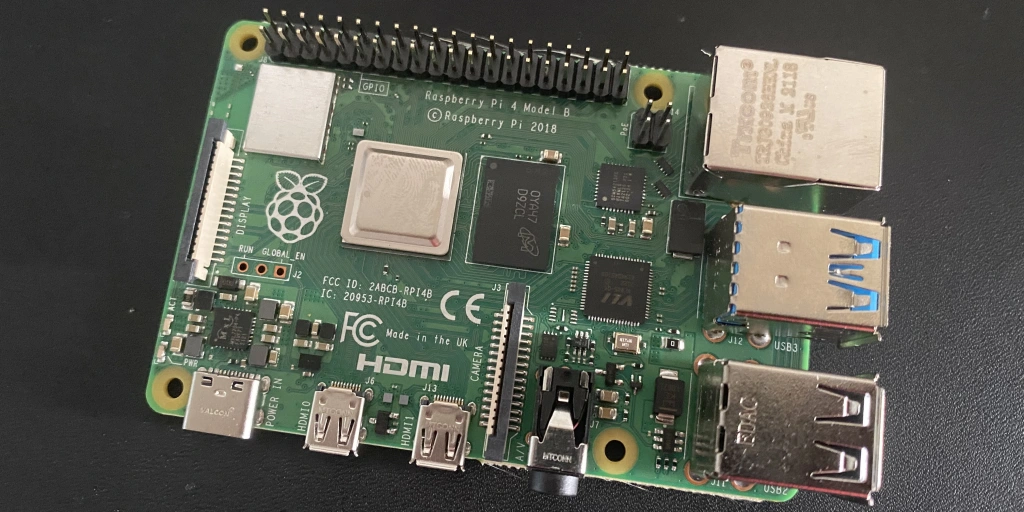

It's been quite a long while since 64-bit CPUs took over the world. Most of the ecosystem moved on, but some of it didn't. The official OS for the Raspberry Pi is one of the laggards, and it has started to be a problem. This post describes how to install the arm64 flavor of Debian on the device.

I need to run my neural network models on this board, so I need TensorFlow to run on it. I had expected a smooth ride, but it turned out to be quite an adventure, and not a pleasant one. Here's a how-to, so you don't have to waste time figuring it out yourself.

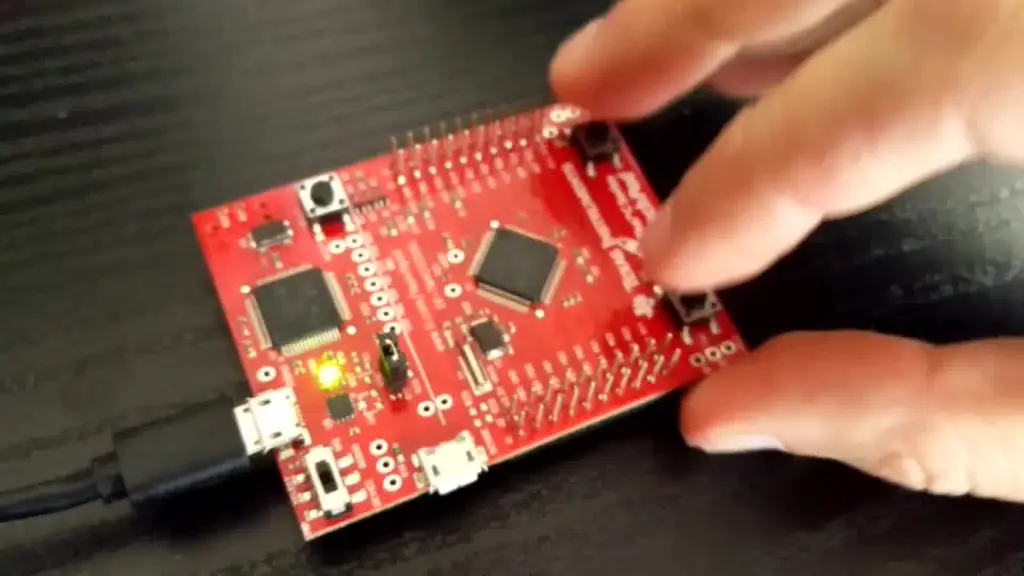

I have a love-hate relationship with developing software for embedded systems. On the one hand, it's loads of fun. On the other, there seem to be lots of closed-up magic proprietary blobs required to make things work. The same can usually be achieved with pure open source if you put some time and understanding into it. This post describes an open-source template for FRDM-K64F.

This post revisits the game project some half a year after the game already worked. The game used timers to handle asynchronicity, which was pretty messy. This post introduces an RTOS, complete with multiprocessing, a scheduler, and semaphores.

I have licenses for those fancy commercial math and simulation programs, and I need to use them occasionally. Somehow, I have developed an aversion to letting non-open-source software access my system and home directory, so I decided to use Docker to contain all that stuff. The challenge comes with handling graphics and sound. This post shows how to provide X and PulseAudio servers in Docker to run GUI apps.

Making the UEFI installation of the UP Board boot Debian and making Debian drive the multimedia peripherals.

The last thing we need is some randomness for the game not to be boring. We will build a Linear Congruential Generator that reads out the timer register on the first button event to get a seed. We will also need a game engine so that things don't get too messy.
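As a rough illustration of the idea, here is a minimal sketch of a Linear Congruential Generator in C. The multiplier and increment are the widely published Numerical Recipes constants, not necessarily the ones the post uses, and the names `lcg_t`, `lcg_seed`, `lcg_next`, and `lcg_below` are hypothetical. The seed is assumed to be whatever value the timer register holds at the first button press.

```c
#include <stdint.h>

/* Hypothetical generator state: a single 32-bit word. */
typedef struct {
    uint32_t state;
} lcg_t;

/* Seed the generator, e.g. with a timer register value read on the
 * first button event (the caller supplies that value). */
void lcg_seed(lcg_t *g, uint32_t timer_value)
{
    g->state = timer_value;
}

/* One LCG step: state = a * state + c (mod 2^32).
 * The modulus is implicit in unsigned 32-bit overflow.
 * Constants are the Numerical Recipes ones (an assumption). */
uint32_t lcg_next(lcg_t *g)
{
    g->state = g->state * 1664525u + 1013904223u;
    return g->state;
}

/* Map the next value into [0, bound) using the high bits,
 * which are of better quality than the low bits in an LCG. */
uint32_t lcg_below(lcg_t *g, uint32_t bound)
{
    return (uint32_t)(((uint64_t)lcg_next(g) * bound) >> 32);
}
```

Seeding from a free-running timer works because the moment of the first button press is effectively unpredictable, so each game starts from a different point in the sequence.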

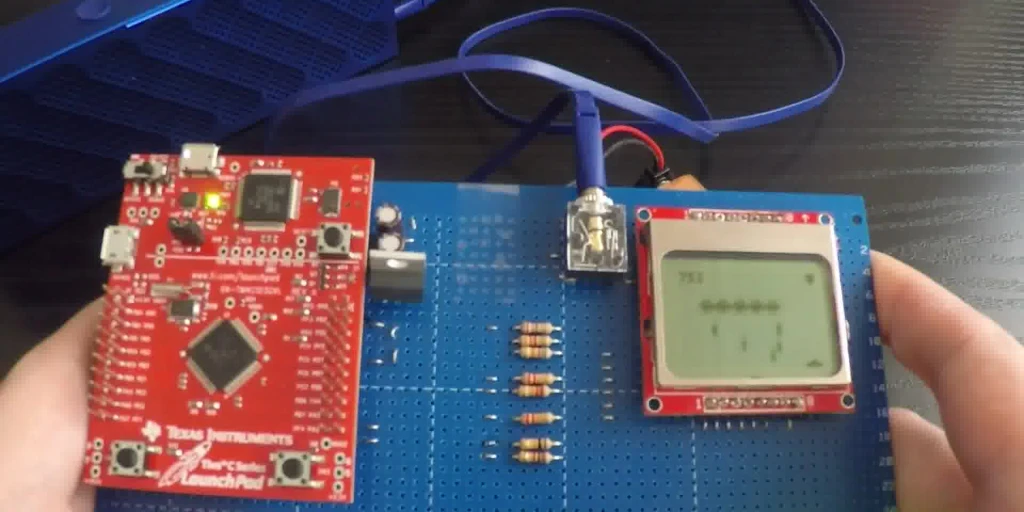

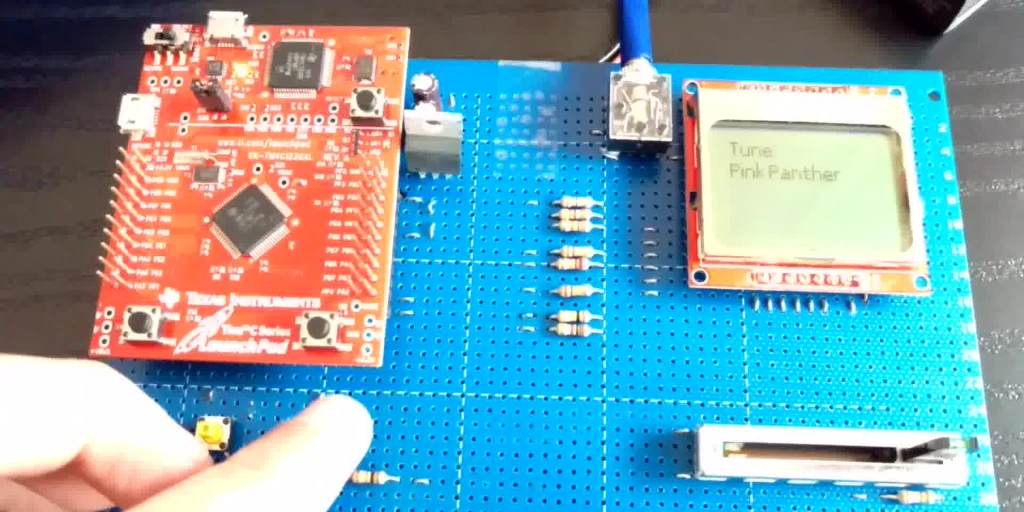

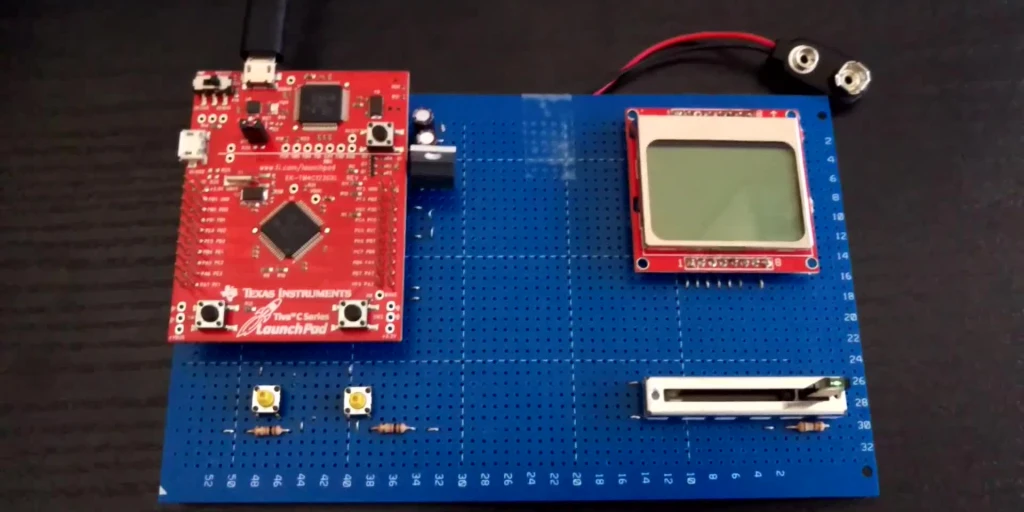

It would not be a cool game if it did not make funky noises. This post describes a way to build a sound device using a DAC and a bunch of resistors. This device will be able to play Nokia's RTTTL tunes.

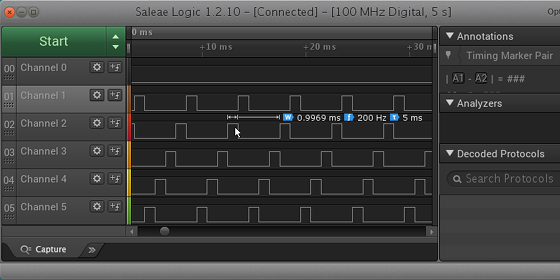

We need to handle IO to move the defender and fire at the invaders. GPIO-connected buttons will do fine to trigger the shots, and we will read out a potentiometer through an ADC to determine the defender's position. This post describes the HAL functions for that and for handling timers, which is always helpful.

Paper summary: The Linux Scheduler: a Decade of Wasted Cores by J.-P. Lozi et al. The authors found issues with the Completely Fair Scheduler on a NUMA system with 64 cores that kept cores idle while tasks were waiting to be executed. Fixing these problems resulted in a 138-fold speedup in an extreme case.

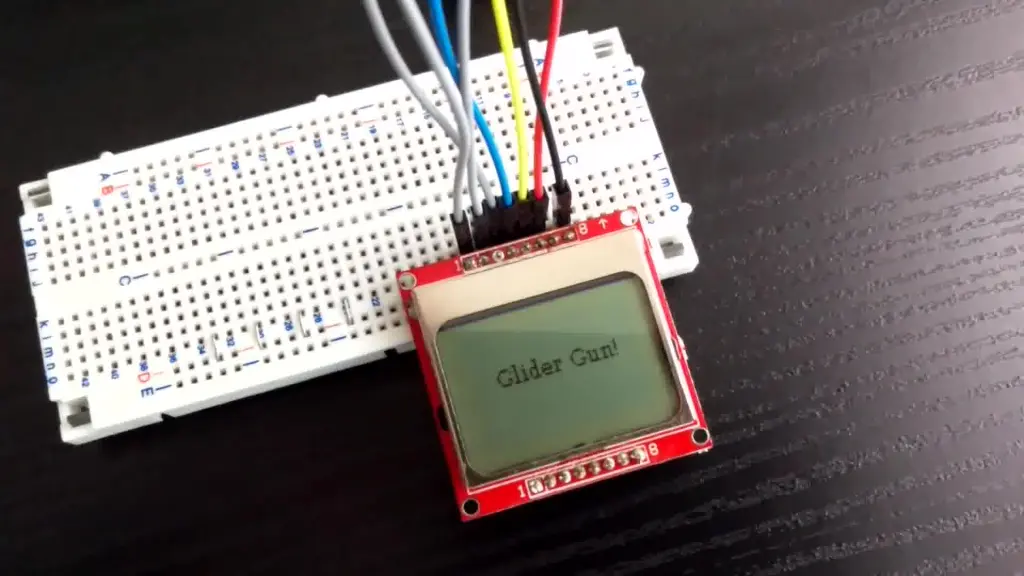

It's obvious, but Silly Invaders needs a display to display invaders. This post describes HAL calls to handle an old Nokia phone display through SSI and other things that were necessary to make it work, such as heap management.

I want my Alien Invaders to be portable and work on other microcontrollers. For that reason, the game logic will use a Hardware Abstraction Layer to communicate with the device. This post describes the skeleton of that layer, dealing with hardware initialization and UART. Every future post will extend it with new functionality.

I have decided to build a simple Alien Invader game to learn more about microcontrollers. It will use Tiva as a base and a bunch of simple peripherals for I/O. This post describes a convenient development environment without any proprietary fluff.

Final thoughts. What's next?

What do you do when you need to signal state changes between threads? Condition variables are likely what you need. Futexes are general enough to be helpful here as well.

Chapter seven deals with read-write locks. They come in handy when many readers may access a resource mutated by relatively few writers.

Linux allows for assigning priorities to tasks and scheduling their execution according to various strategies. It has implications for locking, where the thread's priority may change after it has acquired a mutex. We deal with all that here.

Chapter five discusses cancelation. Threads may be canceled either synchronously or asynchronously. When they are canceled, they can call cleanup handlers. The process is quite involved and invokes some heavy Linux machinery.

Chapter four discusses detaching and joining threads, passing the return value to the parent, and dynamic initialization of data used concurrently by groups of threads.

In part three, we deal with synchronizing access to shared resources. As you know, bad things happen when multiple threads write to the same memory. We will implement basic mutexes using atomic operations on memory and futex syscalls for sleep management.
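To give a flavor of the approach, here is a minimal sketch of a three-state futex mutex, following the well-known design from Ulrich Drepper's "Futexes Are Tricky". It is not the library's actual code: the `sketch_mutex` names are hypothetical, and it uses C11 atomics and the libc `syscall()` wrapper for brevity, whereas the series works without Glibc. It is Linux-only.

```c
#define _GNU_SOURCE
#include <linux/futex.h>
#include <stdatomic.h>
#include <stddef.h>
#include <sys/syscall.h>
#include <unistd.h>

/* state: 0 = unlocked, 1 = locked, 2 = locked with possible waiters */
typedef struct {
    atomic_int state;
} sketch_mutex;

/* Thin wrapper around the futex syscall (no timeout, no second address). */
static long sketch_futex(atomic_int *addr, int op, int val)
{
    return syscall(SYS_futex, addr, op, val, NULL, NULL, 0);
}

static void sketch_mutex_init(sketch_mutex *m)
{
    atomic_init(&m->state, 0);
}

static void sketch_mutex_lock(sketch_mutex *m)
{
    int c = 0;
    /* Fast path: uncontended 0 -> 1 transition, no syscall needed. */
    if (atomic_compare_exchange_strong(&m->state, &c, 1))
        return;
    /* Slow path: mark the lock as contended, then sleep until it is free. */
    if (c != 2)
        c = atomic_exchange(&m->state, 2);
    while (c != 0) {
        /* Sleeps only if the state is still 2 at the time of the call. */
        sketch_futex(&m->state, FUTEX_WAIT, 2);
        c = atomic_exchange(&m->state, 2);
    }
}

static void sketch_mutex_unlock(sketch_mutex *m)
{
    /* Previous value 1 means nobody was waiting, so we are done. */
    if (atomic_fetch_sub(&m->state, 1) != 1) {
        atomic_store(&m->state, 0);
        sketch_futex(&m->state, FUTEX_WAKE, 1); /* wake one waiter */
    }
}
```

The point of the third state is that the uncontended lock and unlock paths never enter the kernel; the futex syscall is made only when another thread may be sleeping on the lock.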

Typically, all the tutorials on building and debugging software for the Tiva microcontroller use Windows and clickable GUI IDEs. We can achieve the same with open source tools and eliminate all the useless fluff.

In the second chapter of the threading saga, we need to find a way to store and retrieve the pointer to the current thread by calling a function. It will be necessary for various internals and the thread-local storage. We can keep it in a segment register that is typically not used in x86_64 long mode but is still taken care of during the context switch.

This is the first in a series of posts describing a threading library I built on top of Linux syscalls without Glibc. This post describes how to call syscalls, manage heap memory with custom-built malloc, and use the clone syscall to create a thread.

This blog is built using a customized version of Coleslaw - a static Common Lisp blogware. This post shares some insight into how it is done.

Set up a virtual machine with 4 KB of RAM by calling magic IOCTLs on /dev/kvm and make it run a program that writes to an IO port, a write that the hypervisor then intercepts.

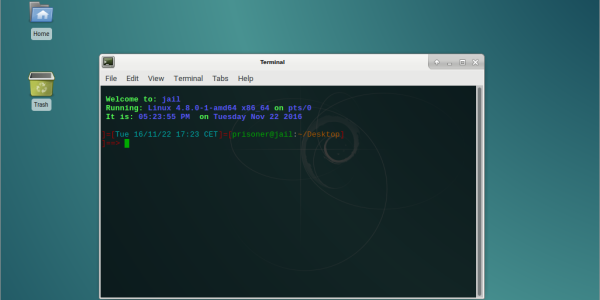

I like my computers to run Debian. Deploying it on strange devices is loads of fun. Here's how to bootstrap it on the Cubox-i4Pro.