Intro

I got tired of having to wait for several hours every time I want to build TensorFlow on my Jetson board. The process got especially painful since NVIDIA removed the swap support from the kernel that came with the most recent JetPack. The swap was pretty much only used during the compilation of CUDA sources and free otherwise. Without it, I have to restrict Bazel resources to a bare minimum to avoid OOM kills when the memory usage spikes for a split second. I, therefore, decided to cross-compile TensorFlow for Jetson on a more powerful machine. As usual, it was not exactly smooth sailing, so here's a quick guide.

The toolchain and target side dependencies

First of all, you will need a compiler capable of producing binaries for the target CPU. I initially built one from source but then noticed that Ubuntu provides one that is suitable for the task.

]==> sudo apt-get install gcc-aarch64-linux-gnu g++-aarch64-linux-gnu

Let's see if it can indeed produce the binaries for the target:

]==> aarch64-linux-gnu-g++ hello.cxx

]==> scp a.out jetson:

]==> ssh jetson ./a.out

Hello, World!
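Running the binary on the board is the definitive test, but you can also check the target architecture on the build host by reading the ELF header. The snippet below is a minimal sketch of that idea, not part of the original setup; the function names are made up for illustration. It relies on the ELF convention that the 16-bit e_machine field sits at offset 18 and equals 183 for AArch64.

```python
import struct

EM_AARCH64 = 183  # e_machine value assigned to AArch64 in the ELF specification


def elf_machine(header):
    """Return the e_machine field given the first 20 (or more) bytes of an ELF file."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # e_ident[5] (EI_DATA) is 1 for little-endian and 2 for big-endian files
    byte_order = "<" if header[5] == 1 else ">"
    # e_machine is a 16-bit value at offset 18, right after the 16-bit e_type
    (machine,) = struct.unpack_from(byte_order + "H", header, 18)
    return machine


def is_aarch64(path):
    """Check whether the file at path is an ELF binary built for AArch64."""
    with open(path, "rb") as f:
        return elf_machine(f.read(20)) == EM_AARCH64
```

Calling is_aarch64("a.out") on the output of the cross compiler should return True, while a binary built with the native x86_64 compiler should give False.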

You will also need the same version of CUDA that comes with the JetPack. You can download the repository setup file from NVIDIA's website. I usually go for the deb (network) option. Set that up and then type:

]==> sudo apt-get install cuda-toolkit-8-0

You will need cuDNN 6 on both the build host and the target. It's quite surprising, but both versions are necessary because the TensorFlow code generators that need to run on the build host depend on libtensorflow_framework.so, which seems to provide everything from string helpers to cuDNN and cuBLAS wrappers. It's not a great design choice, but to do them justice, it's not noticeable unless you try to do weird stuff, like cross-compiling CUDA applications. You can get the host-side version here, and you can download the target version from the device:

]==> export TARGET_PACKAGES=/some/empty/directory

]==> mkdir -p $TARGET_PACKAGES/cudnn/{include,lib}

]==> cd $TARGET_PACKAGES/cudnn

]==> ln -sf lib lib64

]==> scp jetson:/usr/lib/aarch64-linux-gnu/libcudnn.so.6.0.21 lib

]==> cd lib

]==> ln -sf libcudnn.so.6.0.21 libcudnn.so.6

]==> cd ..

]==> scp jetson:/usr/include/aarch64-linux-gnu/cudnn_v6.h include/cudnn.h

The next step is to install the CUDA libraries for the target. Unfortunately, their packaging is broken. The target architecture in the metadata of the relevant packages is marked as arm64. It makes sense on the surface because they contain aarch64 binaries after all, but it makes them not installable. The convention is to mark the architecture of such packages as all (universal) because the binary shared objects they contain are only meant to be stubs for the cross compiler (see here) and are not supposed to be runnable on the build-host. We will, therefore, need some apt trickery to install them:

]==> sudo apt-get -o Dpkg::Options::="--force-architecture" install \
    cuda-nvrtc-cross-aarch64-8-0:arm64 cuda-cusolver-cross-aarch64-8-0:arm64 \
    cuda-cudart-cross-aarch64-8-0:arm64 cuda-cublas-cross-aarch64-8-0:arm64 \
    cuda-cufft-cross-aarch64-8-0:arm64 cuda-curand-cross-aarch64-8-0:arm64 \
    cuda-cusparse-cross-aarch64-8-0:arm64 cuda-npp-cross-aarch64-8-0:arm64 \
    cuda-nvml-cross-aarch64-8-0:arm64 cuda-nvgraph-cross-aarch64-8-0:arm64

Furthermore, the names of the libraries installed by these packages are inconsistent with their equivalents for the build host, so we will need to make some symlinks in order not to confuse the TensorFlow build scripts.

]==> cd /usr/local/cuda-8.0/targets/aarch64-linux/lib

]==> for i in cublas curand cufft cusolver; do \
    sudo ln -sf stubs/lib$i.so lib$i.so.8.0.61 && \
    sudo ln -sf lib$i.so.8.0.61 lib$i.so.8.0 && \
    sudo ln -sf lib$i.so.8.0 lib$i.so; \
    done
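Since every link in these chains points at another link, a typo anywhere leaves a dangling name that only shows up later as a confusing linker error. Here is a small sketch of a check you could run on the directory afterwards. The helper name is hypothetical and it is not part of the TensorFlow build scripts.

```python
import os


def dangling_links(lib_dir):
    """Return the names of symlinks in lib_dir whose final target does not exist."""
    broken = []
    for entry in os.listdir(lib_dir):
        path = os.path.join(lib_dir, entry)
        # os.path.exists follows the whole chain and is False for a broken link
        if os.path.islink(path) and not os.path.exists(path):
            broken.append(entry)
    return sorted(broken)
```

Running dangling_links("/usr/local/cuda-8.0/targets/aarch64-linux/lib") should return an empty list if all the chains end at a real stub library.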

Let's check if all this works at least in a trivial test:

]==> wget https://raw.githubusercontent.com/ljanyst/kicks-and-giggles/master/hello/hello.cu

]==> /usr/local/cuda-8.0/bin/nvcc -ccbin /usr/bin/aarch64-linux-gnu-g++ -std=c++11 \
    --gpu-architecture=compute_53 --gpu-code=sm_53,compute_53 \
    hello.cu

]==> scp a.out jetson:

]==> ssh jetson ./a.out

Input: 1, 2, 3, 4, 5,
Output: 2, 3, 4, 5, 6,

And with cuBLAS:

]==> wget https://raw.githubusercontent.com/ljanyst/kicks-and-giggles/master/hello/hello-cublas.cxx

]==> aarch64-linux-gnu-g++ hello-cublas.cxx \
    -I /usr/local/cuda-8.0/targets/aarch64-linux/include \
    -L /usr/local/cuda-8.0/targets/aarch64-linux/lib \
    -lcudart -lcublas

]==> scp a.out jetson:

]==> ssh jetson ./a.out

A =
1 2 3
4 5 6
B =
7 8
9 10
11 12
A*B =
58 64
139 154
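To know what to expect from the board, you can compute the same product on the build host. The sketch below is a plain Python reference for comparison and has nothing to do with cuBLAS itself. The matrices are the ones printed by the test program above.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    if any(len(row) != len(b) for row in a):
        raise ValueError("inner dimensions do not match")
    # zip(*b) iterates over the columns of b
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


A = [[1, 2, 3], [4, 5, 6]]
B = [[7, 8], [9, 10], [11, 12]]

for row in matmul(A, B):
    print(*row)
```

For example, the top-left element is 1*7 + 2*9 + 3*11 = 58, which matches the first number printed on the Jetson.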

TensorFlow also needs Python headers for the target. The installation process for these is straightforward. You will just need to pre-define the results of some of the configuration tests in the config.site file. These tests either require access to the dev file system of the target or need to run compiled C code, so they cannot be executed on the build-host.

]==> wget https://www.python.org/ftp/python/3.5.2/Python-3.5.2.tar.xz

]==> tar xf Python-3.5.2.tar.xz

]==> cd Python-3.5.2

]==> cat config.site

ac_cv_file__dev_ptmx=yes
ac_cv_file__dev_ptc=no
ac_cv_buggy_getaddrinfo=no

]==> CONFIG_SITE=config.site ./configure --prefix=$TARGET_PACKAGES --enable-shared \
    --host aarch64-linux-gnu --build x86_64-linux-gnu --without-ensurepip

]==> make -j12 && make install

]==> cd $TARGET_PACKAGES/include

]==> mkdir aarch64-linux-gnu

]==> cd aarch64-linux-gnu && ln -sf ../python3.5m

Bazel setup and TensorFlow mods

The way to tell Bazel about a compiler configuration is to write a CROSSTOOL file. The file is just a collection of paths to various tools and the default configuration parameters for them. There are however some things to note here as well. First, the configuration script of TensorFlow asks about the host Python installation and sets the source up to use it. However, what we need in this case is the target Python. Since there seems to be no easy way to plug that into the standard build scripts, we pass the right include directory to the compiler here:

cxx_flag: "-isystem"
cxx_flag: "__TARGET_PYTHON_INCLUDES__"

We will also need to inject some compiler parameters on the fly for some of the binaries, so we call neither the build-host nor the target compiler directly:

tool_path { name: "gcc" path: "crosstool_wrapper_driver_is_not_gcc" }
...
tool_path { name: "gcc" path: "crosstool_wrapper_host_tf_framework" }

As mentioned before, one of the problems is that the code generators that need to run on the build host depend on libtensorflow_framework.so, which, in turn, depends on CUDA. We, therefore, need to let the compiler know where the host versions of the CUDA libraries are installed. The second problem is that Bazel fails to link the code generators against the framework library. We fix that in the host wrapper script:

if ofile is not None:
    # The code generators are host binaries that depend on the framework library
    is_gen = ofile.endswith('py_wrappers_cc') or ofile.endswith('gen_cc')
    # The framework library and the generators need the host CUDA and cuDNN
    if is_cuda == 'yes' and (ofile.endswith('libtensorflow_framework.so') or is_gen):
        cuda_libdirs = [
            '-L', '{}/targets/x86_64-linux/lib'.format(cuda_dir),
            '-L', '{}/targets/x86_64-linux/lib/stubs'.format(cuda_dir),
            '-L', '{}/lib64'.format(cudnn_dir)
        ]

    # Link the generators against the framework library explicitly
    if is_gen:
        tf_libs += [
            '-L', 'bazel-out/host/bin/tensorflow',
            '-ltensorflow_framework'
        ]

As far as the target is concerned, the only problem that I noticed is Bazel failing to set up RPATH for the target version of libtensorflow_framework.so correctly. It causes build failures of some of the binaries that depend on this library. We fix this problem in the wrapper script for the target compiler:

ofile = GetOptionValue(sys.argv[1:], 'o')
if ofile and ofile[0].endswith('libtensorflow_framework.so'):
    # Add the CUDA library directory in the build tree to the RPATH
    cpu_compiler_flags += [
        '-Wl,-rpath,'+os.getcwd()+'/bazel-out/arm-py3-opt/genfiles/external/local_config_cuda/cuda/cuda/lib',
    ]

Some adjustments need to be made to the paths where TensorFlow looks for CUDA libraries and header files. Also, the build_pip_package.sh script needs to be patched to make sure that the resulting wheel file has the correct platform metadata specified in it.
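The target-side RPATH fix shown earlier is only a fragment of the wrapper script, so here is a self-contained sketch of the same decision that you can run and poke at. The names get_option_values and target_rpath_flags are hypothetical. The first one is a simplified stand-in for GetOptionValue, whose definition is not shown in this post, and it assumes the option and its value arrive as two separate arguments.

```python
def get_option_values(argv, option):
    """Return the values following every occurrence of -<option> in argv."""
    flag = "-" + option
    values = []
    for i, arg in enumerate(argv):
        if arg == flag and i + 1 < len(argv):
            values.append(argv[i + 1])
    return values


def target_rpath_flags(argv, workdir, config="arm-py3-opt"):
    """Return the extra linker flags for the target compiler invocation in argv."""
    outputs = get_option_values(argv, "o")
    if outputs and outputs[0].endswith("libtensorflow_framework.so"):
        # Same directory as in the wrapper snippet, relative to the Bazel work dir
        lib_dir = (workdir + "/bazel-out/" + config +
                   "/genfiles/external/local_config_cuda/cuda/cuda/lib")
        return ["-Wl,-rpath," + lib_dir]
    # Every other output is compiled and linked without modification
    return []
```

In the real wrapper, workdir corresponds to os.getcwd() and the returned list is what gets appended to cpu_compiler_flags.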

Building the CPU and the GPU packages

I have put all of the patches I mentioned above in a git repo, so you will need to check that out:

]==> git clone https://github.com/ljanyst/tensorflow.git

]==> cd tensorflow

]==> git checkout v1.5.0-cross-jetson-tx1

Let's try a CPU-only setup first. You need to configure the toolchain, and then you can configure and compile TensorFlow as usual. Use /usr/bin/python3 for python, use -O2 for the compilation flags and say no to everything but jemalloc.

]==> cd third_party/toolchains/cpus/aarch64

]==> ./configure.py

]==> cd ../../../..

]==> ./configure

]==> bazel build --config=opt \
    --crosstool_top=//third_party/toolchains/cpus/aarch64:toolchain \
    --cpu=arm //tensorflow/tools/pip_package:build_pip_package

]==> mkdir out-cpu

]==> bazel-bin/tensorflow/tools/pip_package/build_pip_package out-cpu --platform linux_aarch64
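Before copying the package to the board, it is worth checking that the wheel really carries the platform you asked for, because pip refuses to install a wheel whose platform tag does not match the machine. The platform tag is the last dash-separated field of the wheel file name. The sketch below extracts it. The helper name is made up, and the file name in the usage note is only my assumption of what the build produces.

```python
import glob
import os


def wheel_platform_tag(filename):
    """Return the platform tag encoded in a wheel file name."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file name: " + filename)
    # Wheel names look like name-version[-build]-python-abi-platform.whl
    fields = filename[:-len(".whl")].split("-")
    if len(fields) not in (5, 6):
        raise ValueError("unexpected wheel file name: " + filename)
    return fields[-1]


if __name__ == "__main__":
    # Print the platform tag of every wheel in the output directory, if any
    for path in glob.glob("out-cpu/*.whl"):
        name = os.path.basename(path)
        print(name, wheel_platform_tag(name))
```

For a file named tensorflow-1.5.0-cp35-cp35m-linux_aarch64.whl this returns linux_aarch64, which is what the --platform option above is meant to produce.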

To get the GPU setup working, you will need to rerun the toolchain configuration script and tell it the paths to the build-host side CUDA 8.0 and cuDNN 6. Then configure TensorFlow with the same settings as above, but enable CUDA this time. Tell it the paths to your CUDA 8.0 installation, your target-side cuDNN 6, and specify /usr/bin/aarch64-linux-gnu-gcc as the compiler.

]==> cd third_party/toolchains/cpus/aarch64

]==> ./configure.py

]==> cd ../../../..

]==> ./configure

]==> bazel build --config=opt --config=cuda \
    --crosstool_top=//third_party/toolchains/cpus/aarch64:toolchain \
    --cpu=arm --compiler=cuda \
    //tensorflow/tools/pip_package:build_pip_package

The compilation takes roughly 15 minutes for the CPU-only setup and 22 minutes for the CUDA setup on my Core i7 build host. It's a vast improvement compared to the hours it takes on the Jetson board.

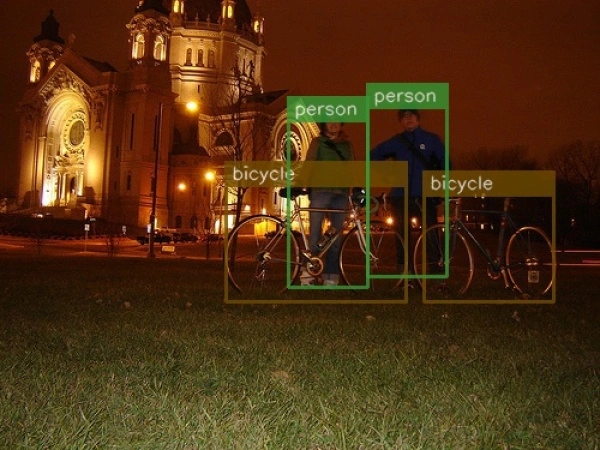

Tests

I haven't done any extensive testing, but my SSD implementation works fine and reproduces the results I get on other boxes. There is, therefore, good reason to believe that things compiled fine.