Intro

I had expected a smooth ride with this one, but it turned out to be quite an adventure, and not a pleasant one. To be fair, the likely reason why it's such a horror story is that I was bootstrapping Bazel - the build software that TensorFlow uses - on an unsupported system. I spent more time figuring out the dependency issues related to that than working on TensorFlow itself. This post was initially supposed to be a rant about Java dependency hell. However, in the end, my stubbornness took the upper hand, and I did not go to sleep until it all worked, so you have a HOWTO instead.

Prerequisites

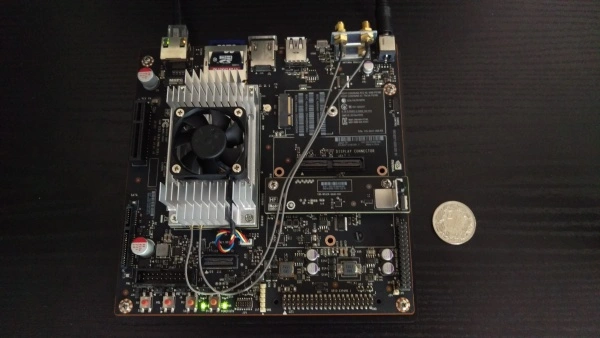

You'll need the board itself and the following installed on it:

- JetPack 3.0

- L4T 24.2.1

- CUDA Toolkit 8.0.34-1

- cuDNN 5.1.5-1+cuda8.0

Dependencies

A Java Development Kit

First of all, you'll need a Java compiler and related utilities. Just type:

]==> sudo apt-get install default-jdk

It would not have been worth a separate paragraph, except that the version that comes with the system messes up the CA certificates. You won't be able to download things from GitHub without overriding SSL warnings. I fixed that by installing ca-certificates and ca-certificates-java from Debian.

Protocol Buffers

You'll need the exact two versions mentioned below. No other versions work down the road. I learned about this fact the hard way. Be sure to call autogen.sh on the master branch first - it needs to download gmock, and the link in older tags points to the void.

]==> sudo apt-get install curl

]==> git clone https://github.com/google/protobuf.git

]==> cd protobuf

]==> ./autogen.sh

This version is needed for the gRPC Java codegen plugin.

]==> git checkout v3.0.0-beta-3

]==> ./autogen.sh

]==> ./configure --prefix=/home/ljanyst/Temp/protobuf-3.0.0-beta-3

]==> make -j12 && make install

This one is needed by Bazel itself.

]==> git checkout v3.0.0

]==> ./autogen.sh

]==> ./configure --prefix=/home/ljanyst/Temp/protobuf-3.0.0

]==> make -j12 && make install
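
With two protoc builds living side by side, it is easy to point a later step at the wrong one. The sketch below is one way to check what a given binary reports. It assumes that `protoc --version` prints a line of the form `libprotoc <version>`; the helper names are made up for this example, and the exact string printed by the beta build is an assumption.

```python
import re
import subprocess


def parse_protoc_version(output):
    """Extract the version from text such as 'libprotoc 3.0.0'."""
    match = re.match(r"libprotoc\s+(\S+)", output.strip())
    if match is None:
        raise ValueError("unexpected protoc output: %r" % output)
    return match.group(1)


def protoc_version(protoc_path):
    """Run the given protoc binary and return the version it reports."""
    out = subprocess.check_output([protoc_path, "--version"])
    return parse_protoc_version(out.decode())
```

Calling `protoc_version()` on each of the two `bin/protoc` paths under the prefixes used above makes it obvious which build is which before they are handed to gRPC Java and Bazel.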

gRPC Java

Building this one took me a horrendous amount of time. At first, I thought that the whole package was needed. Apart from problems with the protocol buffer versions, it has some JNI dependencies that are problematic to compile. Even after I had successfully produced these, they had interoperability issues with other dependencies. After some digging, it turned out that only one component of the package is actually required, so the whole effort was unnecessary. Of course, the source needed patching to make it build on aarch64, but I won't bore you with that. Again, make sure you use the v0.15.0-jetson-tx1 tag - no other tag will work.

]==> git clone https://github.com/ljanyst/grpc-java.git

]==> cd grpc-java

]==> git checkout v0.15.0-jetson-tx1

]==> echo protoc=/home/ljanyst/Temp/protobuf-3.0.0-beta-3/bin/protoc > gradle.properties

]==> CXXFLAGS=-I/home/ljanyst/Temp/protobuf-3.0.0-beta-3/include \
      LDFLAGS=-L/home/ljanyst/Temp/protobuf-3.0.0-beta-3/lib \
      ./gradlew java_pluginExecutable

Bazel

The latest available release of Bazel (0.4.5) does not build on aarch64 without the patch listed below. I took it from the master branch.

diff --git a/src/main/java/com/google/devtools/build/lib/util/CPU.java b/src/main/java/com/google/devtools/build/lib/util/CPU.java

index 7a85c29..ff8bc86 100644

--- a/src/main/java/com/google/devtools/build/lib/util/CPU.java

+++ b/src/main/java/com/google/devtools/build/lib/util/CPU.java

@@ -25,7 +25,7 @@ public enum CPU {

X86_32("x86_32", ImmutableSet.of("i386", "i486", "i586", "i686", "i786", "x86")),

X86_64("x86_64", ImmutableSet.of("amd64", "x86_64", "x64")),

PPC("ppc", ImmutableSet.of("ppc", "ppc64", "ppc64le")),

- ARM("arm", ImmutableSet.of("arm", "armv7l")),

+ ARM("arm", ImmutableSet.of("arm", "armv7l", "aarch64")),

S390X("s390x", ImmutableSet.of("s390x", "s390")),

UNKNOWN("unknown", ImmutableSet.<String>of());
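
To see why this one-line change is enough, here is a rough Python analogue of the table that the patched enum encodes. It is only an illustration, not Bazel's code: the assumption is that Bazel matches the architecture string reported by the JVM against these alias sets, and `canonical_cpu` is a hypothetical helper.

```python
# Canonical CPU name -> accepted architecture spellings, mirroring the patched enum.
CPU_ALIASES = {
    "x86_32": {"i386", "i486", "i586", "i686", "i786", "x86"},
    "x86_64": {"amd64", "x86_64", "x64"},
    "ppc": {"ppc", "ppc64", "ppc64le"},
    "arm": {"arm", "armv7l", "aarch64"},  # "aarch64" is what the patch adds
    "s390x": {"s390x", "s390"},
}


def canonical_cpu(arch, aliases=CPU_ALIASES):
    """Return the canonical name for an architecture string, or 'unknown'."""
    for canonical, names in aliases.items():
        if arch in names:
            return canonical
    return "unknown"
```

With the extra alias, `aarch64` resolves to `arm`; without it, the lookup falls through to `unknown`, which matches the behaviour the patch is meant to fix.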

The compilation is straightforward, but make sure you point to the right version of protocol buffers and the gRPC Java compiler built earlier.

]==> git clone https://github.com/bazelbuild/bazel.git

]==> cd bazel

]==> git checkout 0.4.5

]==> export PROTOC=/home/ljanyst/Temp/protobuf-3.0.0/bin/protoc

]==> export GRPC_JAVA_PLUGIN=/home/ljanyst/Temp/grpc-java/compiler/build/exe/java_plugin/protoc-gen-grpc-java

]==> ./compile.sh

]==> export PATH=/home/ljanyst/Temp/bazel/output:$PATH
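
Since a wrong PROTOC or GRPC_JAVA_PLUGIN path only shows up some way into compile.sh, a quick check before starting the build can save time. This is a minimal sketch; `missing_tools` is a hypothetical helper, and it only tests that each variable points to an executable file, not that the version is the right one.

```python
import os


def missing_tools(env, names=("PROTOC", "GRPC_JAVA_PLUGIN")):
    """Return the variables that are unset or do not point to an executable file."""
    problems = []
    for name in names:
        path = env.get(name)
        if not path or not (os.path.isfile(path) and os.access(path, os.X_OK)):
            problems.append(name)
    return problems
```

Running `missing_tools(os.environ)` in the shell where the two variables were exported returns an empty list when both paths look usable.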

TensorFlow

- Note 22.06.2017: Go here for TensorFlow 1.2.0. See the v1.2.0-jetson-tx1 tag.

- Note 03.07.2017: For TensorFlow 1.2.1, see the v1.2.1-jetson-tx1 tag.

- Note 18.08.2017: For TensorFlow 1.3.0, see the v1.3.0-jetson-tx1 tag. I have tested it against JetPack 3.1, which fixes the CUDA-related bugs. Note that the kernel in this version of JetPack has been compiled without swap support, so you may want to add --local_resources=2048,0.5,0.5 to the bazel command line if you want to avoid the out-of-memory kills.

- Note 21.11.2017: TensorFlow 1.4.0 builds on the Jetson without any modification. However, you will need a newer version of bazel. This is the combination of versions that worked for me:

Patches

The version of the CUDA toolkit for this device is somewhat handicapped. nvcc has problems with variadic templates, and compiling some kernels using Eigen makes it crash. I found that adding:

#define EIGEN_HAS_VARIADIC_TEMPLATES 0

to these problematic files makes the problem go away. A constructor with an initializer list seems to be an issue in one of the cases as well. Using the default constructor instead, and then initializing the array elements one by one makes things go through.

Also, the cuBLAS API seems to be incomplete. It only defines 5 GEMM (General Matrix to Matrix Multiplication) algorithms, whereas the newer patch releases of the toolkit define 8. TensorFlow enumerates them by name to experimentally determine which one is best for a given computation, and the code notes that they may fail under perfectly normal circumstances (e.g., a GPU older than sm_50). Therefore, simply omitting the missing algorithms should be perfectly safe.

diff --git a/tensorflow/stream_executor/cuda/cuda_blas.cc b/tensorflow/stream_executor/cuda/cuda_blas.cc

index 2c650af..49c6db7 100644

--- a/tensorflow/stream_executor/cuda/cuda_blas.cc

+++ b/tensorflow/stream_executor/cuda/cuda_blas.cc

@@ -1912,8 +1912,7 @@ bool CUDABlas::GetBlasGemmAlgorithms(

#if CUDA_VERSION >= 8000

for (cublasGemmAlgo_t algo :

{CUBLAS_GEMM_DFALT, CUBLAS_GEMM_ALGO0, CUBLAS_GEMM_ALGO1,

- CUBLAS_GEMM_ALGO2, CUBLAS_GEMM_ALGO3, CUBLAS_GEMM_ALGO4,

- CUBLAS_GEMM_ALGO5, CUBLAS_GEMM_ALGO6, CUBLAS_GEMM_ALGO7}) {

+ CUBLAS_GEMM_ALGO2, CUBLAS_GEMM_ALGO3, CUBLAS_GEMM_ALGO4}) {

out_algorithms->push_back(algo);

}

#endif

The full patches are here and here.
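
The reasoning behind dropping the extra algorithms can be illustrated with a small Python sketch of the selection idea. This is not TensorFlow's code: `pick_fastest` and `run` are hypothetical, and the only assumption carried over from the text is that every candidate is tried and a failing one is skipped rather than treated as an error.

```python
def pick_fastest(algorithms, run):
    """Try every candidate and return the fastest one that did not fail.

    `run(algo)` is expected to return the elapsed time in seconds, or to
    raise RuntimeError if the algorithm is not usable on this device.
    """
    best, best_time = None, float("inf")
    for algo in algorithms:
        try:
            elapsed = run(algo)
        except RuntimeError:
            continue  # an unusable algorithm is simply skipped
        if elapsed < best_time:
            best, best_time = algo, elapsed
    return best
```

In a scheme like this, a shorter candidate list only narrows the search; the result is still a working algorithm as long as at least one candidate succeeds.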

Memory Consumption

The compilation process may take considerable amounts of RAM - more than the device has available. The documentation advises using only one execution thread (the --local_resources 2048,.5,1.0 parameter for Bazel) to avoid OOM kills. It's unnecessary most of the time, though, because the memory fills up completely only during the last 20% of the compilation steps. Instead, I used an SD card as a swap device.

]==> sudo mkswap /dev/mmcblk1p2

]==> sudo swapon /dev/mmcblk1p2
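
To confirm that the swap device is actually active before starting a multi-hour build, the SwapTotal entry in /proc/meminfo can be checked. A minimal sketch, assuming the usual `SwapTotal: <n> kB` line format; the function names are hypothetical.

```python
def swap_total_kib(meminfo_text):
    """Return the SwapTotal value (in kB) from /proc/meminfo-style text, or 0."""
    for line in meminfo_text.splitlines():
        if line.startswith("SwapTotal:"):
            return int(line.split()[1])
    return 0


def read_swap_total_kib(path="/proc/meminfo"):
    """Read the file at `path` and return its SwapTotal value in kB."""
    with open(path) as handle:
        return swap_total_kib(handle.read())
```

A result of 0 from `read_swap_total_kib()` means that no swap is enabled.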

At peak times, the entire RAM and around 7.5GB of swap were used. However, at most 5 to 6 compilation threads were in the D state (uninterruptible sleep due to I/O), with 2 to 3 being runnable.
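
For reference, the --local_resources value mentioned above consists of three comma-separated numbers: the available RAM in MB, the available CPU in cores, and the available I/O, where 1.0 stands for an average workstation. The sketch below only splits such a value into named fields; `parse_local_resources` and `LocalResources` are hypothetical names, not part of Bazel.

```python
from collections import namedtuple

# Field order follows the flag: RAM (MB), CPU (cores), I/O (1.0 = average workstation).
LocalResources = namedtuple("LocalResources", "ram_mb cpu_cores io")


def parse_local_resources(value):
    """Split a value such as '2048,.5,1.0' into its three numeric fields."""
    parts = value.split(",")
    if len(parts) != 3:
        raise ValueError("expected three comma-separated numbers: %r" % value)
    ram_mb, cpu_cores, io = (float(part) for part in parts)
    return LocalResources(ram_mb, cpu_cores, io)
```

Read this way, `2048,.5,1.0` tells Bazel to plan for 2048 MB of RAM and half a core, which is what keeps the number of parallel compiler processes low.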

Compilation

You need to install these packages before you can proceed.

]==> sudo apt-get install python3-numpy python3-dev python3-pip

]==> sudo apt-get install python3-wheel python3-virtualenv

Then clone my repo containing the necessary patches and configure the source. I used the system version of Python 3, located at /usr/bin/python3 with its default library in /usr/lib/python3/dist-packages. The answers to the CUDA related questions are:

- the version of the SDK is 8.0;

- the version of cuDNN is 5.1.5, and it's located in /usr;

- the CUDA compute capability for TX1 is 5.3.

Go ahead and run:

]==> git clone https://github.com/ljanyst/tensorflow.git

]==> cd tensorflow

]==> git checkout v1.1.0-jetson-tx1

]==> ./configure

Finally, run the compilation and, a little over two hours later, build the wheel:

]==> bazel build --config=opt --config=cuda --curses=no --show_task_finish \
      //tensorflow/tools/pip_package:build_pip_package

]==> bazel-bin/tensorflow/tools/pip_package/build_pip_package /tmp/tensorflow_pkg

]==> cp /tmp/tensorflow_pkg/*.whl ../

I don't particularly like polluting system directories with custom-built binaries, so I use virtualenv to handle pip-installed Python packages.

]==> mkdir -p ~/Apps/virtualenvs/tensorflow

]==> cd ~/Apps/virtualenvs/tensorflow

]==> python3 /usr/lib/python3/dist-packages/virtualenv.py -p /usr/bin/python3 .

]==> . ./bin/activate

(tensorflow) ]==> pip install ~/Temp/tensorflow-1.1.0-cp35-cp35m-linux_aarch64.whl

The device is identified correctly when starting a new TensorFlow session. You should see the following if you don't count warnings about NUMA:

Found device 0 with properties:

name: NVIDIA Tegra X1

major: 5 minor: 3 memoryClockRate (GHz) 0.072

pciBusID 0000:00:00.0

Total memory: 3.90GiB

Free memory: 2.11GiB

DMA: 0

0: Y

Creating TensorFlow device (/gpu:0) -> (device: 0, name: NVIDIA Tegra X1, pci bus id: 0000:00:00.0)

The CPU and the GPU share the memory controller, so the GPU does not have the 4GB to itself. On the upside, you can use the CUDA unified memory model without penalties (no memory copies).
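
The same check can be done from Python instead of reading the log. The sketch below assumes the TensorFlow 1.x `device_lib.list_local_devices()` call from `tensorflow.python.client`; `gpu_names` and `local_gpu_names` are hypothetical helpers, and the import is kept inside the function so that the filtering logic can be tried without TensorFlow installed.

```python
def gpu_names(devices):
    """Return the names of all entries whose device_type is 'GPU'."""
    return [device.name for device in devices if device.device_type == "GPU"]


def local_gpu_names():
    """List the GPU devices that the installed TensorFlow can see."""
    # Imported lazily so that gpu_names() works without TensorFlow.
    from tensorflow.python.client import device_lib
    return gpu_names(device_lib.list_local_devices())
```

Inside the activated virtualenv, a non-empty result from `local_gpu_names()` indicates that the wheel was built with working CUDA support.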

Benchmark

I ran two benchmarks to see if things work as expected. The first one was my TensorFlow implementation of LeNet training on and classifying the MNIST data. The training code ran twice as fast on the TX1 as on my 4th generation Carbon X1 laptop. The second test was my slightly enlarged implementation of Sermanet applied to classifying road signs. The convolution part of the training process took roughly 20 minutes per epoch, which is a factor of two improvement over the performance of my laptop. The pipeline was implemented with a large device in mind, though, and expected 16GB of RAM. The TX1 has only 4GB, so the swap speed was a bottleneck here. Based on my observations of the processing speed of individual batches, I can speculate that a further improvement of a factor of two is possible with a properly optimized pipeline.